Bringing together what belongs together: Matching voice commands and radar data

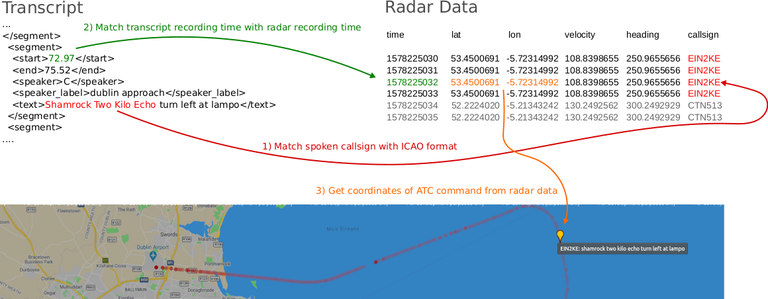

Air-Traffic Control (ATC) radio and Automatic Dependent Surveillance-Broadcast (ADS-B) data provide complementary information. Listening to ATC radio gives insight into the upcoming actions of an aircraft, based on the information exchanged between an Air-Traffic Controller (ATCo) and the pilot. The actual position of the aircraft at any point in time is provided by the radar data, i.e. the ADS-B data. ADS-B contains information such as the latitude, longitude, altitude and velocity of a plane at a given point in time. The ADS-B data is collected by the Open-Sky server with a resolution of a few seconds and therefore gives a fine-grained view of the trajectory of the plane. Matching the radar data with the speech therefore yields more information than either source alone. Figure 1 shows the matching process, which can be broken down into 3 steps:

1) Matching the callsign in the automatically generated transcript (from the voice command issued by the ATCo) with the callsign in the radar data:

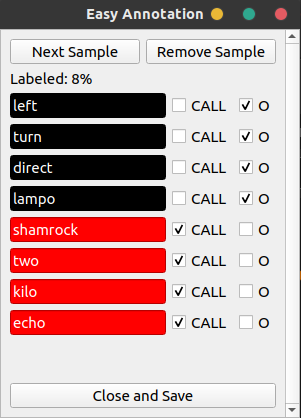

The voice is transcribed, either by human annotators or by an automatic speech recognition system (see the corresponding blog posts). Inside these transcripts, the callsigns are automatically detected. This detection can be refined manually by a human annotator with the tool displayed in Figure 2. The callsigns are then transformed into the ICAO format, which consists of a three-character ICAO designator followed by the flight ID, consisting of letters and numbers. After the conversion, the callsigns from the transcript are matched against the callsigns in the radar data.
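The conversion into the ICAO format and the subsequent callsign match could look roughly like the sketch below. It is only an illustration: the function names `spoken_to_icao` and `match_callsign` are hypothetical, the lookup table contains just three airlines, and only single-word telephony designators are handled.

```python
# Tiny illustrative lookup tables (assumption: a real system needs the full lists).
TELEPHONY_TO_ICAO = {"lufthansa": "DLH", "speedbird": "BAW", "ryanair": "RYR"}

DIGITS = {
    "zero": "0", "one": "1", "two": "2", "three": "3", "four": "4",
    "five": "5", "six": "6", "seven": "7", "eight": "8", "nine": "9",
    "niner": "9",
}

# Spelling-alphabet words map to their first letter.
LETTERS = {
    word: word[0].upper()
    for word in (
        "alfa alpha bravo charlie delta echo foxtrot golf hotel india "
        "juliett juliet kilo lima mike november oscar papa quebec romeo "
        "sierra tango uniform victor whiskey xray x-ray yankee zulu"
    ).split()
}


def spoken_to_icao(spoken):
    """Convert a spoken callsign, e.g. 'lufthansa three two alpha', to 'DLH32A'.

    Returns None if the airline or any following word is unknown.
    """
    words = spoken.lower().split()
    if not words or words[0] not in TELEPHONY_TO_ICAO:
        return None
    flight_id = ""
    for word in words[1:]:
        if word in DIGITS:
            flight_id += DIGITS[word]
        elif word in LETTERS:
            flight_id += LETTERS[word]
        else:
            return None
    return TELEPHONY_TO_ICAO[words[0]] + flight_id


def match_callsign(spoken, radar_callsigns):
    """Return the ICAO callsign if it also occurs in the radar data, else None."""
    icao = spoken_to_icao(spoken)
    # Radar callsigns may carry padding whitespace, so normalise before comparing.
    known = {c.strip().upper() for c in radar_callsigns}
    return icao if icao in known else None


print(match_callsign("Lufthansa three two alpha", ["BAW123 ", "DLH32A"]))
```

An exact string comparison is the simplest possible choice here. Transcripts with recognition errors would probably need a more tolerant comparison, which this sketch does not attempt.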

Figure 1: Matching process.

2) Matching the voice recording time with the timestamp extracted from the radar data:

To get the coordinates of the plane at the time a voice command is given by the ATCo, the timestamp of the voice command (i.e. of the transcript) needs to be matched with the timestamp in the radar data that corresponds to the same callsign.
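One way to picture this step is a nearest-timestamp lookup within the track of the matched callsign. The sketch below is an assumption about how this could be done, not a description of the actual pipeline: the function name `nearest_radar_point`, the tuple layout `(timestamp, lat, lon)` and the 10-second tolerance `max_gap_s` are all made up for illustration.

```python
from bisect import bisect_left


def nearest_radar_point(track, utterance_time, max_gap_s=10.0):
    """Find the radar point closest in time to a voice command.

    track: list of (timestamp, lat, lon) tuples for ONE callsign,
           sorted by timestamp (seconds, same clock as utterance_time).
    Returns the closest tuple, or None if the track is empty or the
    closest point is further away than max_gap_s seconds.
    """
    if not track:
        return None
    times = [point[0] for point in track]
    # Index of the first radar point at or after the utterance time.
    i = bisect_left(times, utterance_time)
    # Only the neighbours just before and just after can be the nearest.
    candidates = track[max(i - 1, 0): i + 1]
    best = min(candidates, key=lambda point: abs(point[0] - utterance_time))
    if abs(best[0] - utterance_time) > max_gap_s:
        return None
    return best


# Hypothetical track with one radar point every 5 seconds.
track = [(100.0, 48.0, 11.0), (105.0, 48.1, 11.1), (110.0, 48.2, 11.2)]
print(nearest_radar_point(track, 106.0))
```

The tolerance guards against pairing a command with a radar point from a different phase of the flight when the track has gaps. A suitable value depends on the actual resolution of the radar data.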

3) Getting the coordinates and combining the data:

When the callsign and timestamp are matched between the radar data and the voice command (transcript), the latitude and longitude of the plane at that time can be extracted. The combined data can then be plotted. In the example from Figure 1, it is clearly visible that the plane turns left after the turn-left command is spoken.
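Put together, the three steps amount to a join between transcripts and radar tracks. The following sketch shows one possible shape of the combined records, ready to be passed to a plotting tool. The function name `combine`, the dictionary keys and the sample values are hypothetical and only serve the illustration.

```python
def combine(utterances, radar_tracks, max_gap_s=10.0):
    """Attach a radar position to every voice command that can be matched.

    utterances:   list of dicts with keys 'callsign' (ICAO format),
                  'time' (seconds) and 'text' (the transcript).
    radar_tracks: dict mapping callsign -> list of (timestamp, lat, lon).
    Commands without a matching callsign or a close enough radar point
    are skipped.
    """
    combined = []
    for utt in utterances:
        track = radar_tracks.get(utt["callsign"], [])
        if not track:
            continue  # callsign not present in the radar data
        # Radar point closest in time to the voice command.
        t, lat, lon = min(track, key=lambda p: abs(p[0] - utt["time"]))
        if abs(t - utt["time"]) > max_gap_s:
            continue  # no radar point close enough in time
        combined.append({**utt, "radar_time": t, "lat": lat, "lon": lon})
    return combined


# Made-up sample data.
utterances = [
    {"callsign": "DLH32A", "time": 106.0, "text": "turn left heading two seven zero"},
    {"callsign": "BAW123", "time": 50.0, "text": "descend flight level eight zero"},
]
radar_tracks = {"DLH32A": [(100.0, 48.0, 11.0), (105.0, 48.1, 11.1)]}

for record in combine(utterances, radar_tracks):
    print(record)
```

In this sample only the first command ends up in the result, because the second callsign has no radar track.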

Figure 2: Callsign detection refinement.

This alignment of the input data is the starting point for several downstream tasks. It is therefore crucial to minimize misalignments at this stage of the data processing.

If you have questions or comments, feel free to contact us through the contact form.