Automatic speech recognition, how it works?

The principal goal of Automatic Speech Recognition (ASR) is to correctly recognize the sequence of words that corresponds to the ‘observed’ acoustic signal. As illustrated in figure 1, the input is a speech signal, while the output is the recognized text. The processing is subdivided into 3 stages:

Figure 1: Architecture of speech recognizer.

The purpose of feature extraction is to compress the waveform into a sequence of fixed-length vectors of low dimension. Usually, we extract one vector per a 10 ms step from 25 ms long chunks of speech signal, i.e. the speech frames. The encoding must preserve the information relevant for speech recognition and suppress the irrelevant information (e.g. differences across speakers, genders, microphones, channels etc.). The typical feature extraction is based on short term spectral analysis of the signal and processing is inspired by properties of human hearing. The most popular feature extraction methods for ASR are FBANKs (log-Mel filterbank energies, figure 2), MFCCs (Mel-Frequency Cepstral Coefficients) and PLPs (Perceptual Linear Prediction). For ATCO2 project, we use high resolution MFCCs and i-vectors [2] as features.

Figure 2: Example of FBANK features (2D-matrix). Each column is obtained from a spectrum of 25ms segment of audio signal around time ‘t’.

In Matching of acoustic units, we ‘convert’ the features into scores of a “closed set” of acoustic units. For illustration, one can think of phonemes as units. The acoustic scores are computed by an acoustic model, usually a Deep Neural Network (DNN). The acoustic models in general are trained on a set of speech recordings, the training is supervised by manual transcriptions. If we cannot have the manual transcriptions, we can generate them with an existing Speech-to-text recognition engine. Then, we train a new model on a mixture of manually and automatically transcribed data, while some form of “smart data-filtering” is possible.

Naturally, better models are obtained when more training data is used. For the training, we need to assign individual acoustic units to time-steps in features. This is not done manually, but by using a forced-alignment algorithm that maps the acoustic units in reference transcripts to the audio with some existing model. If there is no model yet available (beginning of the training), equal lengths of the feature vector sequence are assigned to all acoustic units in an utterance.

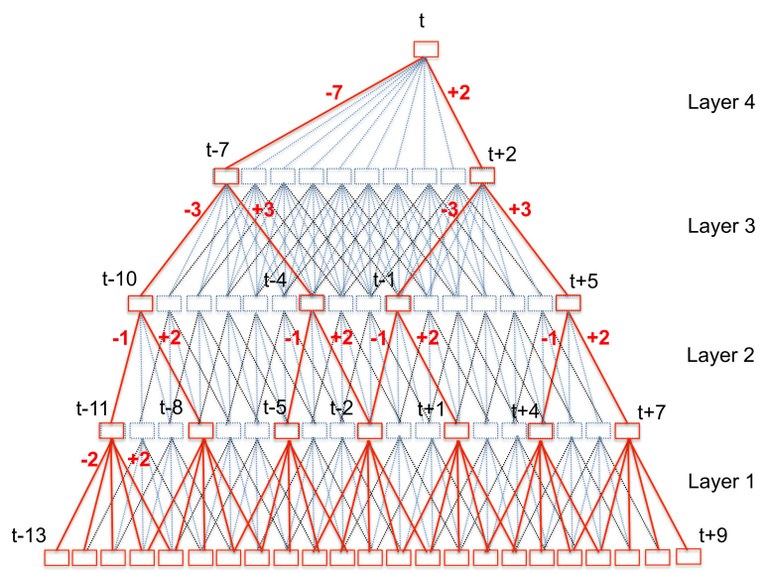

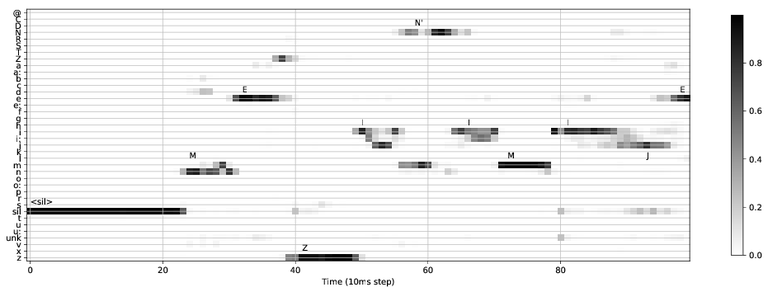

For ATCO2 project, we use TDNN-F acoustic model [3] (figure 3), which is a feed-forward neural network with semi-orthogonal neurons ( semi-orthogonal neurons: technically, the signals from neurons are softly pushed to be independent (i.e. orthogonal)), this increases the capacity for encoding 'useful' information in the narrow hidden layer (bottleneck)) in each of its bottlenecks. This acoustic model is trained with Lattice-Free MMI loss function [4]. The neural network acoustic model produces a matrix with acoustic scores (figure 4).

Figure 3: TDNN neural network. Note that the red arcs are “sparse”, while the output at time ‘t’ (on top) is produced from feature time-span t-13 .. t+9 (bottom).

Figure 4: Illustrative example of 2D matrix with acoustic scores from a neural network. The scores of “tied-states” are summed per-phoneme. Each column holds scores for all the acoustic units at time ‘t’, and the sum of probabilities in each column is 1.0.

In Decoding, we search for the most likely word sequence W’ that corresponds to the ‘observed’ sequence of columns in feature matrix X (figure 2). Alternatively, we can see it as a search for a best “zigzag path” proceeding in horizontal direction through the matrix of acoustic scores (figure 4). However, this search is for a good reason constrained by some prior information about the language (word pronunciations, language model scores).In practice, the decoding is done by a search in a huge graph – recognition network – of all the possible hypotheses, where we combine the scores from the acoustic model, language model and lexicon (dictionary). A typical decoding algorithm is based on two ideas: token passing and beam search:

Token passing, beam search: The idea of token passing is chronological advancement over input features (or acoustic scores) with fixed step-size. For the ‘current’ frame we have a stack of tokens with partial recognition paths. In the next frame, each token is expanded into many new tokens with the possible ‘extensions’ of the hypotheses. To avoid having too many tokens, some of them are discarded. The idea of beam search is that only the tokens with scores within some margin from the best token survive.

Search error: The beam search is a local and greedy heuristic. It makes the speech recognition fast enough for practical use. However, it can lead to a search error, if a more accurate path is discarded because its token locally ‘fell-out’ of the beam. Practically, the beam-width is the distance of log-scores from partial recognition hypotheses. We should make sure the beam is large enough, so that its further extension does not improve the recognition results.

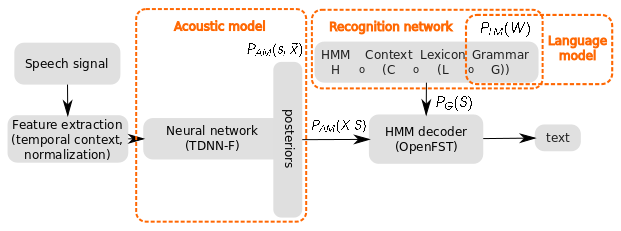

Recognition network: When decoding, the search space for the partial hypothesis is defined by the recognition network. The recognition network is a Weighted Finite-State Transducer (WFST) graph [5], which translates the time-steps over arcs in Hidden Markov Model (HMM) into words (N:1 mapping). The recognition network denoted as ‘H ◦ C ◦ L ◦ G’ is built by composition of 4 graphs that encode: language model (G), pronunciation lexicon (L), context-dependency of a phoneme (C) and HMM topology of a phoneme (H). Note that words that are not in the lexicon or language model cannot be recognized. Such words are missing from the recognition network. See https://kaldi-asr.org/doc/graph_recipe_test for more information about recognition networks.

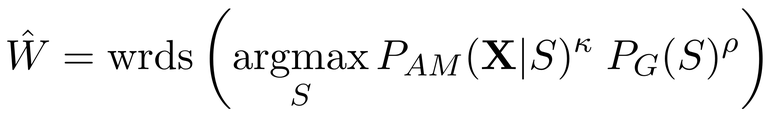

Decoding formula, overall scheme: For the “big-picture” illustration we present the decoding formula in figure 5 together with the overall scheme of our ‘hybrid recognizer’ in figure 4. The central component is the HMM decoder, which explores the search space defined by the Recognition network H ◦ C ◦ L ◦ G. Internally, the partial paths have costs computed from acoustic model scores PAM(X|S) and graph scores PG(S), where S is the hypothesized state-sequence of the partial path from the beginning of the utterance. In the decoding formula (figure 5) we see that we search for such state sequence S that has the maximal score, and we read the corresponding word sequence W’ by using the operator wrds(.). For optimal Word Error Rate (WER) performance, two hyper-parameters need to be identified, the acoustic scale κ and language-model scale ρ.

Figure 4: Overall scheme of Speech-to-text recognition engine.

Figure 5: Decoding formula.

After reading this document, you may have a basic idea of how the automatic speech recognition works. While writing this article, we have been aware that it’s not easy to address the broad spectrum of audience, such as in the ATCO2 project. Nevertheless we did our best to mention the most important concepts, while being as illustrative as possible. If you have any comments or questions, feel free to contact us at: iveselyk@fit.vutbr.cz

[1] Project Kaldi: http://kaldi-asr.org/

[2] George Saon, Hagen Soltau, David Nahamoo, and Michael Picheny. Speaker adaptation of neural network acoustic models using i-vectors. In Automatic Speech Recognition and Understanding (ASRU), 2013 IEEE Workshop on, pages 55–59. IEEE, 2013.

[3] Daniel Povey, Gaofeng Cheng, Yiming Wang, Ke Li, Hainan Xu, Mahsa Yarmohammadi, and Sanjeev Khudanpur. Semi-orthogonal low-rank matrix factorization for deep neural networks. In Interspeech 2018, 19th Annual Conference of the International Speech Communication Association, Hyderabad, India, 2-6 September 2018, pages 3743–3747, 2018.

[4] Daniel Povey, Vijayaditya Peddinti, Daniel Galvez, Pegah Ghahremani, Vimal Manohar, Xingyu Na, Yiming Wang, and Sanjeev Khudanpur. Purely sequence-trained neural networks for asr based on lattice-free mmi. In Proceedings of Interspeech, pages 2751–2755, 09 2016.

[5] Michael Riley, Cyril Allauzen, and Martin Jansche. Openfst: An open-source, weighted finite-state transducer library and its applications to speech and language. In Human Language Technologies: Conference of the North American Chapter of the Association of Computational Linguistics, Proceedings, May 31 - June 5, 2009, Boulder, Colorado, USA, Tutorial Abstracts, pages 9–10, 2009.