The annotation started

The ATCO2 project aims to develop an automatic tool for transcribing air-traffic communication. "Automatic" means that the speech communication between pilots and ATC will be transcribed into text by a machine, including tags for important parts such as commands, call signs, values and names. Next, a web interface (a transcription platform) will be developed and made fully available to enthusiasts, who can verify the automatic transcripts. The output of the transcription platform is therefore data: lots of data, available to the community, to research and to commerce. With such data, anyone can build their own tool to process the speech and extract useful information from it.

To make this happen, we need several components:

1] A device for capturing and recording the voice data. The ATCO2 project aims to keep this equipment as lightweight as possible, so that the receiver is easy for users to install and the hardware cost stays low.

2] Automatic speech recognizers that pre-transcribe and tag the recorded voice data.

3] A transcription platform where enthusiasts help verify and correct the pre-transcribed data. They can do this in two ways:

- Either by correcting the transcripts,

- or simply by checking whether the automatic transcript is correct.

As a bonus, these transcripts will serve as a feedback loop that lets the automatic speech recognizers improve over time.
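The two verification modes produce training material in slightly different ways. Below is a minimal sketch, assuming a hypothetical `Segment` record (not the actual ATCO2 or SpokenData.com data model): a segment counts as verified either when a volunteer typed a corrected transcript or when they confirmed the automatic one, and rejected or unchecked segments are left out of the feedback loop. The example phrases are made up.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Segment:
    """Hypothetical record: one speech segment with its automatic pre-transcript."""
    segment_id: str
    asr_text: str
    # Mode 1: the volunteer typed a corrected transcript (None if not corrected).
    corrected_text: Optional[str] = None
    # Mode 2: the volunteer only judged the ASR text as correct (True) or wrong (False).
    asr_is_correct: Optional[bool] = None


def verified_transcript(seg: Segment) -> Optional[str]:
    """Return a human-verified transcript usable as training data, or None."""
    if seg.corrected_text is not None:
        return seg.corrected_text      # corrected by a volunteer
    if seg.asr_is_correct:
        return seg.asr_text            # automatic transcript confirmed as correct
    return None                        # rejected or not yet checked


def training_pairs(segments: List[Segment]) -> List[Tuple[str, str]]:
    """Collect (segment_id, text) pairs to feed back into recognizer training."""
    pairs = []
    for seg in segments:
        text = verified_transcript(seg)
        if text is not None:
            pairs.append((seg.segment_id, text))
    return pairs


segments = [
    Segment("s1", "lufthansa one two three climb flight level one zero zero",
            asr_is_correct=True),
    Segment("s2", "swiss four five descent four thousand",
            corrected_text="swiss four five descend four thousand feet"),
    Segment("s3", "tower hello", asr_is_correct=False),
]
print(training_pairs(segments))
```

Only segments s1 and s2 end up in the training set; s3 was rejected without a correction, so it contributes nothing until someone fixes it.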

However, to train a speech recognizer that transcribes ATC communication reasonably well, we need training and test data, and the closer this data is to the target domain (ATC communication), the better. The training and test data must be carefully transcribed by humans. We could of course use other transcribed data, and we even have some, but it is mostly out-of-domain (YouTube videos, read books, telephone conversations, ...) and too far from ATC communication.

We started recording ATC data ad hoc. The data is processed by voice activity detection (to keep only the speech segments) and speaker segmentation (which tries to guess which segments are spoken by the same person). The resulting audio files (~5 minutes long) are distributed to a dozen annotators. They are the first enthusiasts and volunteers who came on board (thanks to our project partner, the OpenSky Network). The volunteers listen to the audio and transcribe it. This is difficult work, as they need to search other sources for metadata (call signs, local names of runways, etc.) so that the final transcription of the controller-pilot communication is correct.
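To illustrate the first processing step, here is a simple energy-threshold voice activity detector that keeps only speech and packs the resulting segments into roughly 5-minute chunks. This is a sketch under stated assumptions: the post does not say which VAD ATCO2 uses (production systems are usually model-based), the frame length and threshold are arbitrary, the 5-minute limit is applied to speech duration, and speaker segmentation is not covered.

```python
import math
from typing import List, Tuple

Span = Tuple[float, float]  # (start_s, end_s)


def frame_energies(samples: List[float], frame_len: int) -> List[float]:
    """Mean energy of non-overlapping frames of `frame_len` samples."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(sum(x * x for x in frame) / frame_len)
    return energies


def speech_segments(energies: List[float], frame_sec: float,
                    threshold: float) -> List[Span]:
    """Merge consecutive frames at or above `threshold` into speech segments."""
    segments, start = [], None
    for i, e in enumerate(energies):
        if e >= threshold and start is None:
            start = i                                   # speech begins
        elif e < threshold and start is not None:
            segments.append((start * frame_sec, i * frame_sec))
            start = None                                # speech ends
    if start is not None:                               # speech runs to the end
        segments.append((start * frame_sec, len(energies) * frame_sec))
    return segments


def pack_into_chunks(segments: List[Span],
                     max_chunk_sec: float = 300.0) -> List[List[Span]]:
    """Group speech segments into chunks holding at most ~max_chunk_sec of speech."""
    chunks, current, total = [], [], 0.0
    for seg in segments:
        dur = seg[1] - seg[0]
        if current and total + dur > max_chunk_sec:
            chunks.append(current)                      # close the full chunk
            current, total = [], 0.0
        current.append(seg)
        total += dur
    if current:
        chunks.append(current)
    return chunks


# Synthetic 8 kHz signal: 1 s silence, 1 s of a 440 Hz tone, 1 s silence.
rate = 8000
samples = ([0.0] * rate
           + [0.5 * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate)]
           + [0.0] * rate)
frame_len = 160                                         # 20 ms frames
segs = speech_segments(frame_energies(samples, frame_len), frame_len / rate, 0.01)
print(segs)
print(pack_into_chunks(segs))
```

A fixed energy threshold is the simplest possible choice and breaks down on noisy radio channels, which is one reason real systems prefer trained VAD models; the chunking step is independent of how the segments were found.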

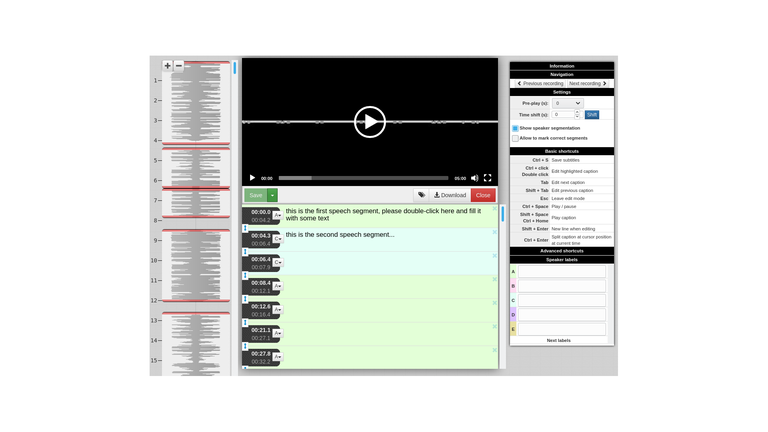

The process is shown in the figures: the automatic pre-transcriptions of the speech are converted into the final, human-verified transcripts.

To make sure that verification is uniform across volunteers, the transcribers have to go through a four-page annotation manual, so that the speech is transcribed consistently.

The web interface you see is a transcription portal developed for the SpokenData.com service, which we (ReplayWell, as a project partner) bring into the project. As you can see, the volunteers not only need to transcribe the speech (the text in the middle of the picture) but also need to assign each speech segment to its speaker (the list at the bottom right: call signs, tower, etc.). They see a waveform on the left side, and they also have to check whether the automatic segmentation is correct.

ReplayWell’s main goal is to redesign SpokenData.com for the ATCO2 project so that it serves the volunteers and gives them all the important information on a single web page. And this is the second role of the first dozen volunteers: to provide us with feedback. We need to understand how they transcribe and what other data they need, so that we can make their life easier. At least, the transcriber’s life would be easier :)

At the time of publishing this blog post, the volunteers have already transcribed over 3 hours of audio, which yielded one hour of speech. This is a perfect starting point for evaluating the automatic speech recognizers for the ATCO2 project.
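With human transcripts available, the recognizers' output can be scored against them. Word error rate (WER) is the standard metric for this, although the post does not say which metric ATCO2 will report. The following is a minimal sketch of the usual Levenshtein-based WER; the example phrases are made up.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    # prev[j] = edit distance between the reference prefix processed so far
    # and the first j hypothesis words (one row of the dynamic-programming table).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,          # deletion of a reference word
                          curr[j - 1] + 1,      # insertion of a hypothesis word
                          prev[j - 1] + cost)   # substitution or match
        prev = curr
    return prev[len(hyp)] / max(len(ref), 1)


ref = "speedbird two one descend flight level eight zero"
hyp = "speedbird two one descent level eight zero"
print(f"WER = {word_error_rate(ref, hyp):.3f}")  # prints "WER = 0.250"
```

Here the recognizer made one substitution ("descent") and one deletion ("flight") against 8 reference words. In practice both texts are normalized (case, punctuation, spelled-out numbers) before scoring, which is exactly where a consistent annotation manual pays off.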

Thank you, guys, for doing this job! Much appreciated!

Igor@ReplayWell