Contextual adaptation for improving call sign recognition

Applying Automatic Speech Recognition (ASR) to the Air Traffic Control (ATC) domain is difficult due to factors such as noisy radio channels, foreign accents, cross-language code-switching, very fast speech rate, and situation-dependent vocabulary with many infrequent words. Combined, these factors lead to error rates high enough to make speech recognition hard to apply in practice.

Contextual adaptation is beneficial for ATC ASR. For instance, we can use a list of airplanes that are nearby. From the airport identity, we can derive local waypoints, local geographical names, phrases in the local language, etc. It is important that the adaptation is dynamic, i.e. the adaptation snippets of text change over time. The adaptation also has to be lightweight, so it should not require rebuilding the recognition network from scratch. We integrate the snippets of text by means of Weighted Finite State Transducer (WFST) composition.
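To illustrate how a list of nearby airplanes could become text snippets, the Python sketch below expands ICAO-style callsigns into the word sequences a recognizer would hear. The designator table `TELEPHONY` and the helper names are hypothetical, and the text above does not describe how the snippets are actually generated; treat this as one possible assumption.

```python
from typing import Dict, List

# Hypothetical lookup table: ICAO airline designator -> spoken telephony word.
# Two entries for illustration only; a real system would load a full table.
TELEPHONY: Dict[str, str] = {"BER": "air_berlin", "DLH": "lufthansa"}

# Digits spoken one by one (plain English words; ICAO variants such as
# "tree" or "niner" are left out of this sketch).
DIGITS = ["zero", "one", "two", "three", "four",
          "five", "six", "seven", "eight", "nine"]


def callsign_to_words(callsign: str) -> List[str]:
    """Expand e.g. 'BER123' into ['air_berlin', 'one', 'two', 'three']."""
    designator, suffix = callsign[:3], callsign[3:]
    words = [TELEPHONY[designator]]
    for ch in suffix:
        if ch.isdigit():
            words.append(DIGITS[int(ch)])
        else:
            # Letters are kept as lowercase tokens here; a real system would
            # map them to the spelling alphabet ("alfa", "bravo", ...).
            words.append(ch.lower())
    return words


def context_snippets(nearby: List[str]) -> List[List[str]]:
    """Turn the nearby-aircraft list into word sequences to boost,
    skipping callsigns whose designator is not in the table."""
    return [callsign_to_words(cs) for cs in nearby if cs[:3] in TELEPHONY]


if __name__ == "__main__":
    print(context_snippets(["BER123", "DLH4AB", "XYZ999"]))
```

Because the snippets are recomputed from the current traffic picture, they can change from one utterance to the next without touching the recognition network itself.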

HCLG boosting

We apply on-the-fly boosting to the HCLG graph. The HCLG graph is the recognition network that defines the paths the beam-search HMM decoder explores. This graph contains costs that can be altered, and we alter them by WFST composition:

HCLG’ = HCLG o B.

The composition is denoted by the operator ‘o’, and its algorithm is described in [1]. Informally, the output symbols of the left operand are matched with the input symbols of the right operand. The weights from both graphs are combined as defined by the semiring of the WFST weights. The result is a single graph with the input symbols of the left operand and the output symbols of the right operand. An example of a boosting graph B is shown in Figure 1.
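The informal description of composition can be made concrete with a small Python sketch. It assumes epsilon-free transducers and the tropical semiring (weights are costs, and combining two arcs means adding their weights). Real recognition networks contain epsilon arcs, which need the filtered algorithm from [1]; the `Fst` class and `compose` function are illustrative names, not an existing library API.

```python
from collections import deque
from dataclasses import dataclass
from typing import Dict, List, Tuple

Arc = Tuple[int, str, str, float, int]  # (src, ilabel, olabel, weight, dst)


@dataclass
class Fst:
    start: int
    finals: Dict[int, float]  # final state -> final weight
    arcs: List[Arc]

    def out_arcs(self, state: int) -> List[Arc]:
        return [arc for arc in self.arcs if arc[0] == state]


def compose(left: Fst, right: Fst) -> Fst:
    """Epsilon-free composition in the tropical semiring (weights add).
    Only state pairs reachable from the start pair are built."""
    start = (left.start, right.start)
    ids: Dict[Tuple[int, int], int] = {start: 0}
    queue = deque([start])
    arcs: List[Arc] = []
    finals: Dict[int, float] = {}
    while queue:
        ls, rs = queue.popleft()
        sid = ids[(ls, rs)]
        if ls in left.finals and rs in right.finals:
            finals[sid] = left.finals[ls] + right.finals[rs]
        for _, ilab, olab, w1, ldst in left.out_arcs(ls):
            for _, ilab2, olab2, w2, rdst in right.out_arcs(rs):
                if olab != ilab2:
                    continue  # output of left must match input of right
                nxt = (ldst, rdst)
                if nxt not in ids:
                    ids[nxt] = len(ids)
                    queue.append(nxt)
                # Keep the left input label and the right output label.
                arcs.append((sid, ilab, olab2, w1 + w2, ids[nxt]))
    return Fst(0, finals, arcs)
```

For example, composing a one-arc transducer `a:x/1.0` with `x:y/2.0` yields a single arc `a:y/3.0`, which is exactly the "inputs from the left, outputs from the right, weights combined" behaviour described above.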

[1] Mehryar Mohri, Fernando Pereira, Michael Riley: Weighted finite-state transducers in speech recognition. Comput. Speech Lang. 16(1): 69-88 (2002)

[2] Keith B. Hall, Eunjoon Cho, Cyril Allauzen, Françoise Beaufays, Noah Coccaro, Kaisuke Nakajima, Michael Riley, Brian Roark, David Rybach, Linda Zhang: Composition-based on-the-fly rescoring for salient n-gram biasing. INTERSPEECH 2015: 1418-1422


Figure 1. “Toy-example” topology of a WFST graph B for boosting the recognition network HCLG. The boosting is done as composition HCLG’ = HCLG o B, which introduces the score discounts into the HCLG recognition network.

As Figure 1 shows, we boost individual words. We cannot boost whole phrases, since such a composition would require too much computation time. Also, we should not boost common words, which are likely to be present in the lattice anyway. Therefore, we boost only ‘rare’ words such as the airline designators from call signs (e.g. ‘air_berlin’). In the future, we are considering boosting waypoints, local names and frequent phrases in the local language.
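The exact topology of Figure 1 is not spelled out in the text. A common form for word-level boosting, assumed in the Python sketch below, is a single-state B with one self-loop `word:word` per vocabulary word: weight equal to the discount for boosted words and 0 for all others. With such a B, composition does not add any states; it only shifts the weight of every HCLG arc whose output is a boosted word. The discount value of -3.0 and the string input labels (stand-ins for Kaldi transition-ids) are illustrative assumptions.

```python
from typing import Dict, List, Tuple

EPS = "<eps>"
Arc = Tuple[int, str, str, float, int]  # (src, ilabel, olabel, weight, dst)


def boost_one_state(hclg_arcs: List[Arc],
                    discounts: Dict[str, float]) -> List[Arc]:
    """Compose HCLG arcs with an assumed one-state boosting graph B.

    B has a self-loop word:word/discount for boosted words and word:word/0
    for all other words, and its only state is both initial and final.
    In the tropical semiring the composed weight is the sum of both weights.
    """
    boosted = []
    for src, ilab, olab, weight, dst in hclg_arcs:
        # Epsilon outputs do not consume a symbol of B, so they stay as-is.
        extra = 0.0 if olab == EPS else discounts.get(olab, 0.0)
        boosted.append((src, ilab, olab, weight + extra, dst))
    return boosted


if __name__ == "__main__":
    hclg = [(0, "t1", "air_berlin", 5.0, 1),
            (1, "t2", EPS, 1.0, 2),
            (2, "t3", "one", 2.0, 3)]
    for arc in boost_one_state(hclg, {"air_berlin": -3.0}):
        print(arc)
```

Only the ‘air_berlin’ arc becomes cheaper; common words such as ‘one’ keep their original cost, matching the choice to boost only rare words.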

Lattice boosting

In lattice boosting, we have more freedom in designing the boosting graphs. A lattice is a relatively small graph compared to the HCLG graph, and lattices are acyclic. Together, this leads to faster runtimes of the composition operation. Therefore, the boosting graph B can encode many word sequences, each of which obtains the score discount only if the whole word sequence is matched in the lattice during the WFST composition.

As in the previous section, the composition is done as:

L’ = L o B

where L is the input lattice, B is a boosting graph such as the one in Figure 2, and L’ is the output lattice with the score discounts introduced by the composition.

Figure 2. A “toy-example” topology of a WFST graph B for boosting lattices (speech-to-text output with alternative hypotheses). The boosting is done as composition L’ = L o B, which introduces score discounts for the word sequences that we decided to boost. These word sequences represent the contextual information.

Lattice boosting is specific to each utterance, and the composition is run in batch mode for a whole test set. The toy example in Figure 2 has a “lower part” with all the words of the lexicon in parallel; this ensures that no word sequence is dropped by the composition. A phi symbol #0 is placed on the “entrance” to the lower part (a phi transition is taken only when no other transition matches). The “upper part” encodes the word sequences that we want to boost (e.g. call signs), with score discounts of -4 or -8 on the word links. Because #0 acts as a phi symbol in the composition, the lower part is entered only if the partial word sequence in the lattice cannot be matched with the “upper part” of the B graph (the part with discounts).
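The effect of the upper and lower parts can be approximated in Python on an n-best list, i.e. on individual lattice paths, instead of performing a true lattice composition. This is a simplified sketch under several assumptions: the upper part is stored as a trie, the whole discount is applied once a complete phrase is matched (rather than being spread over the word links as in Figure 2), and on a mid-phrase mismatch the sketch simply takes the phi fallback for the current word and restarts matching at the next word. The function names and example hypotheses are hypothetical; costs are negative log-probabilities, so lower is better.

```python
from typing import Dict, List, Tuple

Phrase = Tuple[str, ...]
Trie = Dict[int, Dict[str, int]]


def build_trie(phrases: Dict[Phrase, float]) -> Tuple[Trie, Dict[int, float]]:
    """'Upper part' of B as a trie: arcs[state] maps a word to the next
    state, final_discount[state] holds the discount of a complete phrase."""
    arcs: Trie = {0: {}}
    final_discount: Dict[int, float] = {}
    for phrase, discount in phrases.items():
        state = 0
        for word in phrase:
            if word not in arcs[state]:
                new_state = len(arcs)
                arcs[state][word] = new_state
                arcs[new_state] = {}
            state = arcs[state][word]
        final_discount[state] = discount
    return arcs, final_discount


def boost_hypothesis(words: List[str], cost: float, arcs: Trie,
                     final_discount: Dict[int, float]) -> float:
    """Walk one hypothesis through B; discounts apply only to whole phrases."""
    i = 0
    while i < len(words):
        state, j, best_end, best_disc = 0, i, None, 0.0
        # Follow the upper part as far as the words allow (longest match).
        while j < len(words) and words[j] in arcs[state]:
            state = arcs[state][words[j]]
            j += 1
            if state in final_discount:
                best_end, best_disc = j, final_discount[state]
        if best_end is None:
            i += 1  # phi (#0) fallback: lower part, one word, no discount
        else:
            cost += best_disc  # tropical semiring: discount adds to the cost
            i = best_end
    return cost


def rescore(nbest: List[Tuple[str, float]],
            phrases: Dict[Phrase, float]) -> Tuple[float, str]:
    """Return the (boosted cost, hypothesis) pair with the lowest cost."""
    arcs, final_discount = build_trie(phrases)
    return min((boost_hypothesis(h.split(), c, arcs, final_discount), h)
               for h, c in nbest)


if __name__ == "__main__":
    nbest = [("air_berlin one two three climb", 12.0),
             ("air_berlin one two free climb", 11.0)]
    print(rescore(nbest, {("air_berlin", "one", "two", "three"): -4.0}))
```

Here the hypothesis containing the full call sign receives the -4 discount and overtakes the originally better-scored competitor, while the partial match ‘air_berlin one two free’ gets no discount at all.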

Results

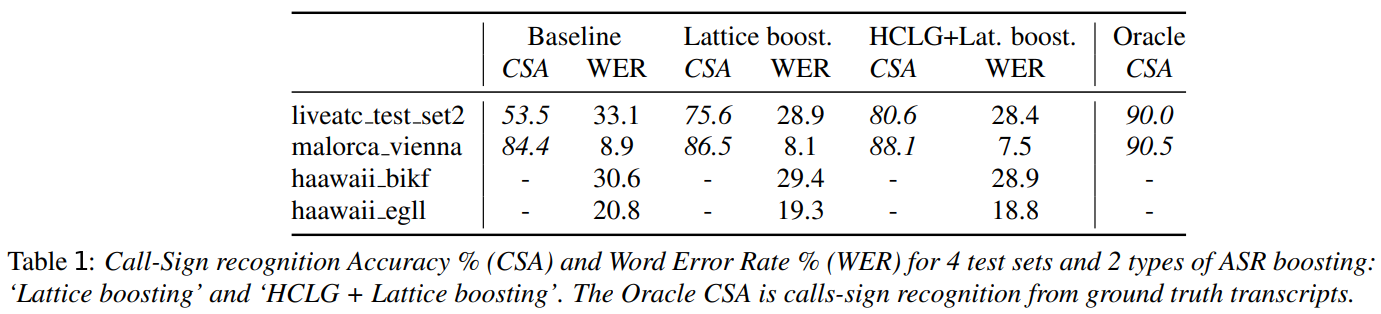

The experiments with HCLG boosting and Lattice boosting are summarized in the paper we submitted to the conference Interspeech 2021. Here, we share the main table from the paper:

The table contains both word error rate (WER) and call sign accuracy (CSA) results. On liveatc_test_set2, CSA improves substantially, from 53.5 to 80.6. On malorca_vienna, the absolute CSA improvement is smaller; nevertheless, the gain from 84.4 to 88.1 closes 60.7% of the gap between the baseline CSA and the oracle CSA. We also see that lattice boosting on its own already brings good improvements, and the best results are obtained by combining HCLG boosting and lattice boosting.
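The “gap closed” figure for malorca_vienna can be written out explicitly. The formula below is our reading of the phrase “gap spanning from baseline to oracle CSA”, with the oracle value left symbolic because it is only given in the table:

```latex
\text{gap closed}
  = \frac{\mathrm{CSA}_{\text{boost}} - \mathrm{CSA}_{\text{base}}}
         {\mathrm{CSA}_{\text{oracle}} - \mathrm{CSA}_{\text{base}}}
  = \frac{88.1 - 84.4}{\mathrm{CSA}_{\text{oracle}} - 84.4}
  = 0.607
```

Solving for the oracle gives roughly 84.4 + 3.7 / 0.607 ≈ 90.5; this is only implied by the rounded numbers, and the value reported in the table takes precedence.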